How SGE might (not) impact your organic traffic

Google's Search Generative Experience (SGE) is a topic we cover regularly in our weekly SEO newsletter. The newsletter itself is only available in German, but since this particular article seems highly relevant to the whole SEO community, we decided to publish an English translation.

One week before writing this critique of the framework in question, I reported on newly released SGE features and closed with these words:

"We need to be vigilant, but cannot focus too much on things in the future rather than the present, because it is unclear when and even if the SGE will be rolled out in Germany."

And just a couple of days later I came across this article that almost made me fall off my chair. 🪑

The article presented a framework for predicting the impact of Google's SGE and promised answers to the following questions:

"If and when Google SGE goes live, how will it impact organic traffic?

Will our traffic drop, and if so, by how much?

And what can we do about it?"

To me, this sounded like clickbait.

The basis of the framework

The framework relies on four simplifying assumptions (the emoji shows whether I agree ✅, disagree ❌, or only agree under certain conditions 🚧):

- ✅ "If your page ranks in the snapshot carousel, you will get (some) organic traffic."

- ❌ "Traffic from the snapshot carousel will be equal to or less than the same rank on Google today."

- ❌ "If you don't rank in the SGE snapshot carousel, but you do rank in the traditional 10 blue links, you won't get any traffic (this is a conservative assumption; in reality, some users will probably scroll past SGE and click the blue links)."

- 🚧 "If the SGE is not active, you will rank the same as you do today."

Assumption 1 sounds plausible. Whether, and how much, traffic will come from the carousel boxes or the source links still needs to be determined, though.

I find assumption 2 difficult, because only informational keywords were considered. Many in e-commerce SEO, myself included, expect product pages to get plenty of opportunities to gain traffic and to become more important than they are today.

Assumption 3 is immediately qualified in the article itself, because it is unrealistic. Here, the model effectively undermines itself.

Assumption 4 also needs to be viewed with skepticism. If the author means "when there is no AI overview", I agree. If not, I disagree, because Google is heavily invested in SGE, and chances are high that Google Search will soon come with SGE built in, without a setting that lets you choose between "I want SGE" and "I don't want SGE".

What I mentioned only in passing deserves emphasis:

"Our study focused on websites in the technology industry, with traffic mainly from informational keywords."

The results definitely cannot be generalized.

More complaining

The "actual rank" is supposed to indicate whether a page appeared in an AI overview and, if so, in which position. The article describes it like this:

- Optimistic rank: "This is the best rank seen. For example, if you checked three times and ranked 3, 5, and one time didn't rank at all, the optimistic rank is 3."

- Pessimistic rank: "The pessimistic rank is taken as 'not ranking' if the page wasn't ranking in even one observation. If the page is ranking in all of them, we take the average. In the example above, the pessimistic rank is 'not ranking'."

This is oversimplified. I get it: you have to start somewhere. But there is a reason why we SEOs use professional tools that track our keywords over long periods. The author suggests observing a keyword and URL ranking three times to determine the optimistic or pessimistic rank, but does not say WHEN to do that. I could take those three observations within a single day or spread them across a week, and the results would be very different.

How would the observations compare if the samples were taken once per week? This information is essential for predicting how organic traffic will develop over time.
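To make the two rank definitions concrete, here is a minimal Python sketch of how I read them. The article does not publish any code, so encoding a missed observation as `None` and the function names `optimistic_rank` and `pessimistic_rank` are my own assumptions.

```python
from statistics import mean
from typing import Optional, Sequence

# One observation: the page's position in the AI overview, or None if it did not appear.
Observation = Optional[int]


def optimistic_rank(observations: Sequence[Observation]) -> Optional[float]:
    """Best (lowest) rank seen in any observation; None if the page never ranked."""
    ranked = [r for r in observations if r is not None]
    return min(ranked) if ranked else None


def pessimistic_rank(observations: Sequence[Observation]) -> Optional[float]:
    """None ('not ranking') if any observation missed the page, else the average rank."""
    if not observations or any(r is None for r in observations):
        return None
    return mean(observations)


# The article's example: ranked 3, then 5, then not at all.
print(optimistic_rank([3, 5, None]))   # 3
print(pessimistic_rank([3, 5, None]))  # None
```

Note that nothing in these functions knows when the observations were taken: three checks within one hour and three checks spread over a week produce exactly the same inputs.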

In addition to the actual rank, the framework makes assumptions about potential CTR changes. Current CTR values are used to derive an optimistic prediction (all three initially visible carousel slides get the same amount of traffic) and a pessimistic one (half of the optimistic CTR) in order to simulate potential changes.

Assuming that all three positions get the same amount of traffic is very unrealistic. Even more important: by the time the article was published, Google had already introduced source links inside the AI overview (via small drop-down arrows), and the framework does not account for them at all.
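Put together with assumption 3, the CTR scenarios boil down to very little arithmetic. The sketch below is my own reading, not the author's model: `baseline_ctr` is a hypothetical stand-in for "current CTR values" (the article does not say which CTR figure is used), and treating carousel ranks beyond the three visible slides as zero traffic is an assumption.

```python
from typing import Optional

# Assumption: only the initially visible carousel slides receive clicks.
VISIBLE_CAROUSEL_SLOTS = 3


def estimated_clicks(
    monthly_searches: int,
    carousel_rank: Optional[float],
    baseline_ctr: float,
    optimistic: bool = True,
) -> float:
    """Click estimate for one keyword under the framework's simplified assumptions.

    baseline_ctr is a hypothetical input standing in for "current CTR values".
    """
    # Assumption 3: not in the carousel means no traffic, even with a blue-link ranking.
    if carousel_rank is None or carousel_rank > VISIBLE_CAROUSEL_SLOTS:
        return 0.0
    # Optimistic: every visible slide gets the same CTR. Pessimistic: half of that.
    ctr = baseline_ctr if optimistic else baseline_ctr / 2
    return monthly_searches * ctr


def traffic_change_pct(current_clicks: float, predicted_clicks: float) -> float:
    """Relative change in percent, e.g. -30.0 for a 30% loss."""
    return (predicted_clicks - current_clicks) * 100 / current_clicks
```

Because both scenarios scale one and the same baseline CTR, position 1 and position 3 in the carousel are treated identically, which is exactly the equal-traffic assumption I question here.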

How are keywords selected?

The instructions say to choose a small set of keywords (20%) that accounts for a large share of organic traffic (80%). That sounds reasonable. However, for large websites, or websites whose traffic is spread across many different keywords or URLs, this will not work: either 20% of the keywords is still far too many to check by hand, or no small set of keywords covers most of the traffic.

Assumptions and simplifications aside, this model is not scalable. It relies entirely on manual observations, which stop being feasible above a certain site size.
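The 80/20 selection itself is easy to automate, and a quick sketch shows why it does not help diversified sites. The function name and both sample keyword sets below are hypothetical and purely illustrative.

```python
from typing import Dict, List


def keywords_for_traffic_share(clicks_by_keyword: Dict[str, int], share: float = 0.8) -> List[str]:
    """Top keywords by clicks, taken in descending order until they cover `share` of all clicks."""
    total = sum(clicks_by_keyword.values())
    selected: List[str] = []
    covered = 0
    ranked = sorted(clicks_by_keyword.items(), key=lambda item: item[1], reverse=True)
    for keyword, clicks in ranked:
        if total == 0 or covered >= share * total:
            break
        selected.append(keyword)
        covered += clicks
    return selected


# A concentrated site vs. a site with traffic spread evenly over 1,000 keywords.
concentrated = {"kw-a": 500, "kw-b": 300, "kw-c": 100, "kw-d": 60, "kw-e": 40}
diversified = {f"kw-{i}": 10 for i in range(1000)}
print(len(keywords_for_traffic_share(concentrated)))  # 2
print(len(keywords_for_traffic_share(diversified)))   # 800
```

For the concentrated site, two keywords are enough. For the diversified one, the "small" set is 800 keywords, each of which would have to be observed manually at least three times.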

How meaningful are the results?

Anyone hoping to learn how big SGE's impact could be would probably fall off their chair too, just as I almost did while reading the article.

According to the examples in the article, the very same case could see anything from an extreme 30% traffic loss to a potential 220% gain in organic traffic. That range is huge and shows that the framework is of limited use, especially since the predictions rest on a sample of just three observations per actual rank.

SGE is extremely volatile. If there is one thing worth emphasizing, it is this.

Here are a few examples of how the AI overview changed for two queries over the last few weeks:

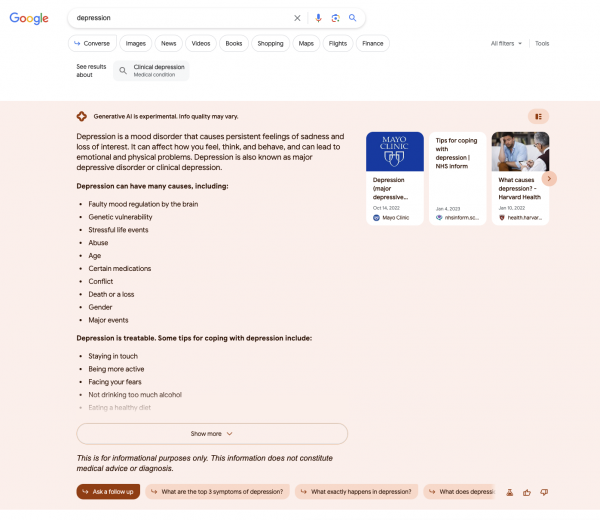

Example 1: Depression (YMYL)

Date: 2023/08/26

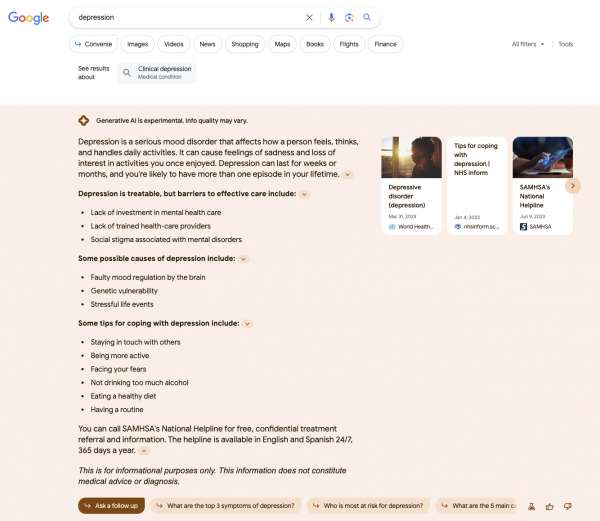

Date: 2023/09/11

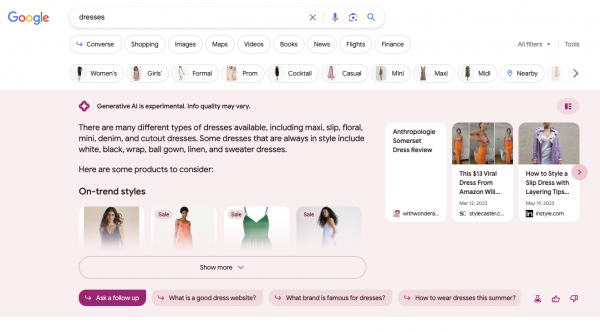

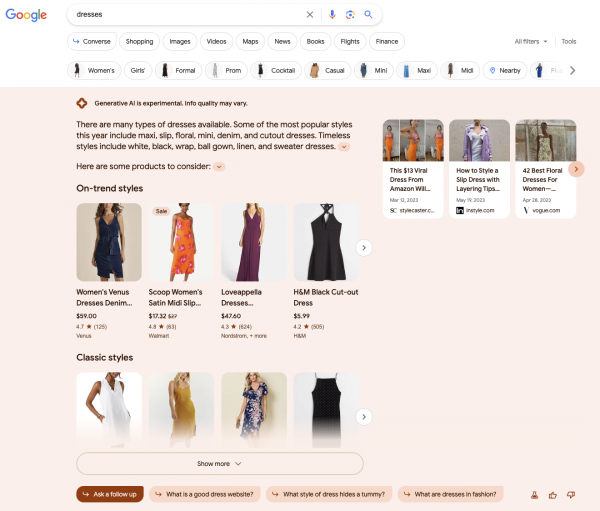

Example 2: Dresses (E-Commerce Category)

Date: 2023/08/26

Date: 2023/09/11

The differences are obvious, sometimes larger, sometimes smaller. The AI overview for the first query, "depression", changed noticeably: the first version focused on a long list of possible causes, followed by tips on how to cope. The second version emphasizes that depression is treatable, lists only a few possible causes, then goes into detail about coping and also provides a helpline for getting in touch with someone right away if needed. The directly visible sources also differ.

The changes in the SGE answer for "dresses" are even more drastic. While the sources are similar (though not their positions), the snapshot is much bigger, almost twice the size. A website missing from the second version of this AI overview would have far more reason to expect a drastic traffic loss. Interestingly, the dress images show the models' faces in one version but not in the other.

There is more!

At the end of the article, possible experiments and interventions are suggested and demonstrated, or rather not, because "it's a secret".

Case studies are exciting when they are well prepared, but only if the results are real. Here, the author shows anonymized customer data and the possible effects on those websites, effects that he and his agency's "measures" are now supposed to prevent (losses) or secure (gains).

SGE has not been officially released in regular Google Search. Currently, it is a test in the USA, and at the end of August 2023 it was also made available in India and Japan, again as a test. That's it. And since SGE was announced, a lot has changed. A few examples:

- Source links are now integrated within the generated text (the carousel boxes were not easily recognizable as the sources of the generated answer)

- For some queries, SGE didn't generate a reply at all (for a while, I could not generate AI overviews for queries about politicians)

- Local results can show a pack of three to five entries

Even the case studies provided include one result that predicts both a potential 31% loss and a possible 97% gain in organic traffic. Predictions with such a wide range are too extreme for my liking.

What did I observe?

I've followed everything related to SGE since its announcement and regularly check a defined set of keywords. What sounds like magic in the article (secret interventions that cannot be named or explained) is actually very similar to what we already did with featured snippets in the past:

- Which keywords have a featured snippet?

- How long/short is the snippet?

- How is the snippet formatted?

- Where in the HTML document is the text of the featured snippet (e.g. at the top, bottom, etc.)?
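The last question on that checklist can be answered with a small script instead of by eye. Below is a minimal sketch using only Python's standard `html.parser`; the class and function names are hypothetical, and measuring the position on the visible text (ignoring `<script>` and `<style>`) rather than on the raw HTML is my own simplification.

```python
from html.parser import HTMLParser
from typing import List, Optional


class VisibleTextParser(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def snippet_position(html: str, snippet: str) -> Optional[float]:
    """Where the snippet starts in the page text: 0.0 = top, 1.0 = bottom.

    Returns None if the snippet text is not found verbatim (case-insensitive).
    """
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse whitespace so line breaks and tag boundaries don't prevent a match.
    text = " ".join(" ".join(parser.parts).split()).lower()
    needle = " ".join(snippet.split()).lower()
    if not needle:
        return None
    index = text.find(needle)
    if index == -1:
        return None
    return index / max(len(text) - len(needle), 1)
```

A value close to 0.0 means the snippet text sits near the top of the page. Paraphrased snippets will not match verbatim, so a `None` result only means "not found word for word".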

A site can appear in the carousel box of the AI overview if it features the information provided in the AI overview (search intent). As with featured snippets, there is a lot of volatility here, even more, as described above. The same AI overview can change multiple times a day.

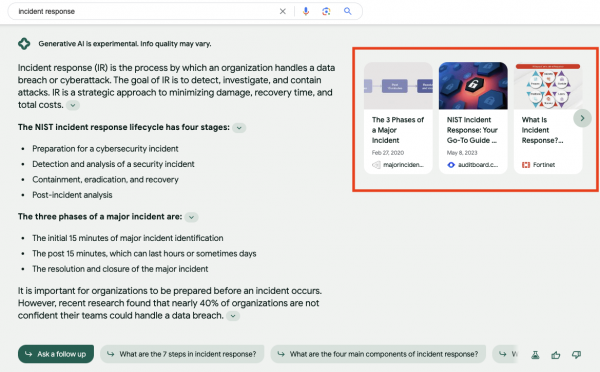

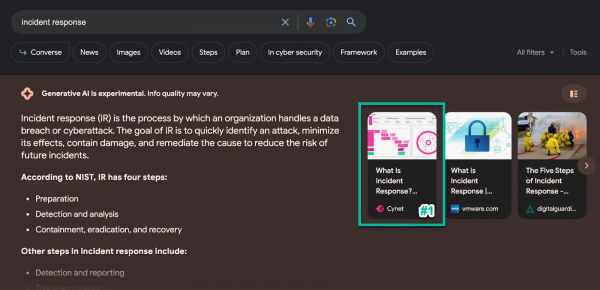

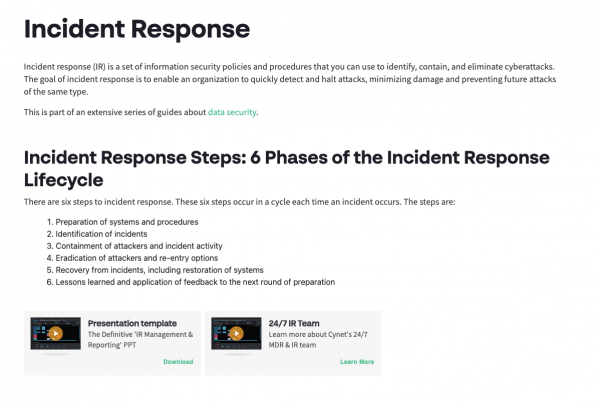

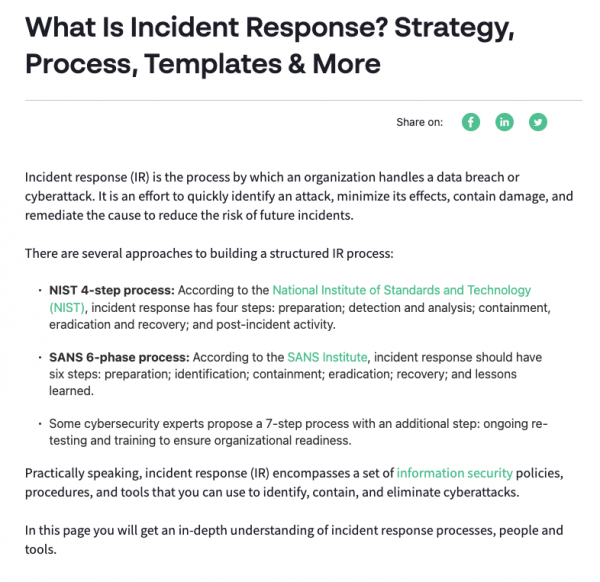

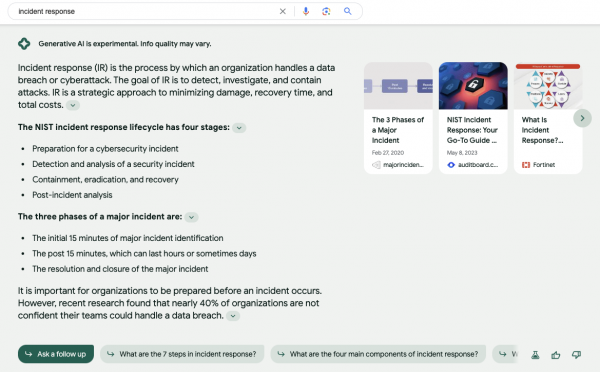

The article shows a specific example of a customer who ranked in position 1 of the carousel box for the query "incident response". Amusingly, the AI overview in the example mentions three steps but lists four bullet points. Anyway, take a look:

This is what the customer's "incident response" URL used to look like (start of the page, checked via the Wayback Machine):

After the changes it looks like this (again, the start of the page):

The page was changed to better fit the informational intent behind the query: the content was slightly altered, headlines were rewritten, and the different incident response processes were mentioned by name. The earlier version also had a short definition, but it lacked the names of the two processes, NIST and SANS, and it did not split the definition and the process(es) into separate sections.

At the time of writing, the AI overview looks quite different once again. Cynet does not appear in position 1 inside the overview and is not cited in any of the source links.

My final take

At the beginning, I quoted myself, and I want to return to the essence of that quote:

- Be vigilant and follow SGE developments

- Think about the potential risk for your organic traffic and your most important keywords

- Try to come up with potential interventions that could prevent a traffic loss or favor a traffic gain

- If you are based in the US, India, or Japan: try to get into the AI overview yourself and test what works and what does not work for your website

- BUT: Don't make yourself go crazy over frameworks such as the one mentioned in the article

The framework ignores other keyword types (transactional, local, etc.). Of course, you can develop your own framework, as the article suggests. But the one it describes, which could serve as your template, is not scalable and rests on unrealistic assumptions that should be examined critically before you even try it out.

We don't yet know if, when exactly, and in what form SGE will be officially rolled out. This is especially true for EU countries: it took some time, for example, for Bard to become available in Germany. The same applies to new SERP features launched in the US; usually, not every country gets everything, or not at the same time.

Instead of a framework to predict the unpredictable, what we need is continuous tracking of SGE AI overviews for our most important keywords. We would need to measure the following:

- If/How a website ranks in organic search (we already have this information)

- If/How a website ranks inside the AI overview

- How long it ranks there

- How often a website is cited inside the AI overview (besides being featured in the carousel box)

- How the position inside the carousel box changes

But even that is not enough, because there are ads (and according to Google, we love ads). We also need to know which queries do not produce an AI overview on their own: some only generate one after the user clicks a prompt, and others don't have this feature at all. I observed this with recipe queries, for example.
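What could such tracking data look like? Here is a minimal sketch of a per-keyword record and a summary covering the measurements listed above. The `OverviewCheck` schema, its field names, and the `summarize` function are hypothetical; how the checks are collected, and how ads would be recorded, is deliberately left out.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class OverviewCheck:
    """One check of one keyword for one website (hypothetical tracking schema)."""
    checked_on: date
    organic_rank: Optional[int]        # classic organic position, None if not ranking
    overview_shown: bool               # did the query produce an AI overview at all?
    carousel_position: Optional[int]   # position in the carousel box, None if absent
    inline_citations: int = 0          # source links to the site inside the generated text


def summarize(checks: List[OverviewCheck]) -> dict:
    """Aggregates per-keyword tracking metrics from a list of checks."""
    ordered = sorted(checks, key=lambda c: c.checked_on)
    with_overview = [c for c in ordered if c.overview_shown]
    in_carousel = [c for c in with_overview if c.carousel_position is not None]
    positions = [c.carousel_position for c in in_carousel]
    organic = [c.organic_rank for c in ordered if c.organic_rank is not None]
    return {
        "checks": len(ordered),
        # Share of checks in which the query produced an AI overview at all.
        "overview_rate": len(with_overview) / len(ordered) if ordered else 0.0,
        # Distinct days on which the site appeared in the carousel box.
        "days_in_carousel": len({c.checked_on for c in in_carousel}),
        "inline_citations": sum(c.inline_citations for c in with_overview),
        # How often the carousel position differed from the previous sighting.
        "position_changes": sum(1 for a, b in zip(positions, positions[1:]) if a != b),
        "best_carousel_position": min(positions) if positions else None,
        "best_organic_rank": min(organic) if organic else None,
    }
```

Recording `overview_shown` separately from `carousel_position` keeps queries without any AI overview (like the recipe queries) distinct from queries where an overview exists but the site is missing from it.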

Which SEO tool provider will be the first to integrate SGE functionality once it is rolled out? What will traffic data from SGE look like? Will we even get those numbers? There are plenty of things we could try to predict after all. 🔮

If you have your own theories, feel free to contact us for a chat.